GREGORY HOOD

On the surface, it was funny.

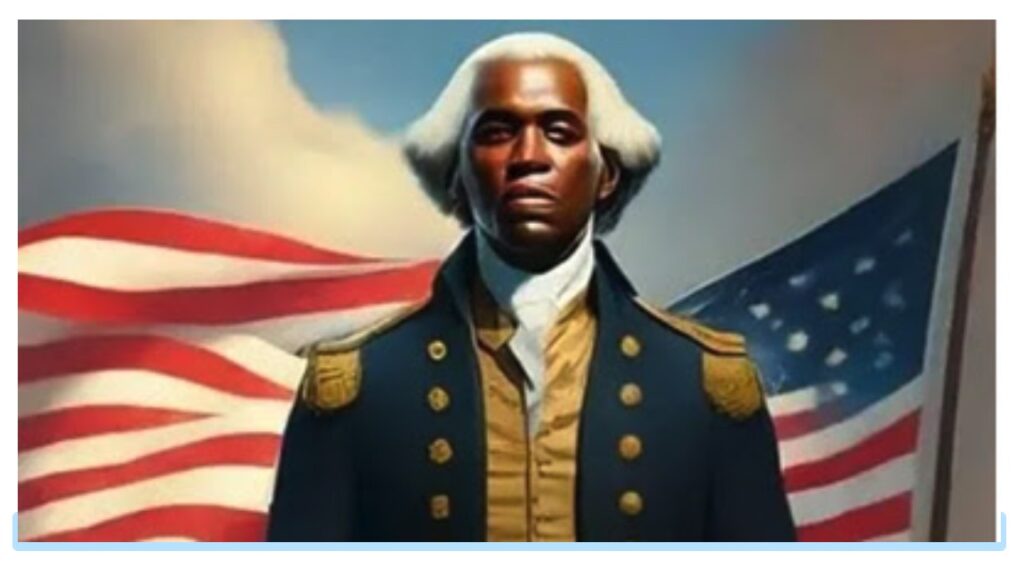

Google’s “Gemini” AI was supposed to make images after users gave it a prompt. The images essentially purged whites from their own history and seemingly from all positive representation.

Did you know that all the popes were apparently non-white and some were female? There were no white men among the Founding Fathers, who were not really “Fathers” at all but proud women of color along with some American Indians. The Vikings were black too — but then again, pop culture has already moved in that direction. Perhaps AI is merely catching up. Users on X had great fun challenging others to “try to make Gemini make an image of a white man.” Some just could not do it.

None of this is surprising. When ChatGPT and other Large Language Models (LLMs) hit the business world, “civil rights leaders” immediately demanded that the models be changed to accommodate their ideology. Their media allies dutifully echoed and amplified the demands, with the New York Times memorably asking, “Who is making sure the AI machines aren’t racist?” Someone must, because if AI is fed pure data, it will generate “disparate impact,” which is unacceptable and arguably illegal.

Rep. Yvette Clarke (D-NY) called taming bias in AI the “civil rights issue of our time.” This is true on two levels. First, there must always be a “civil rights issue” to justify government intervention, redistribution of wealth, and media panic. Second, reality is typically seen to have a “racist” bias, and there must always be efforts by government to cripple emerging technologies lest people speak forbidden truths too openly. The Biden administration duly decreed that “irresponsible uses of AI can lead to and deepen discrimination, bias, and other abuses in justice, healthcare, and housing.” Thus, landlords, contractors, and other professions were banned from using AI algorithms to “exacerbate discrimination,” and the Department of Justice is training people to prosecute these new civil rights crimes.

Much of the media coverage of AI also justified a new career for experts and affirmative action hires to look over the shoulders of programmers to make sure that AI did not generate the “wrong” results. These workers are essentially commissars. The British newspaper Metro said “ChatGPT Has a Racism Problem,” and the only way to fix it is to alleviate a “lack of diversity.” The White House’s “AI Bill of Rights” warned that “algorithmic discrimination may violate legal protections,” required an “algorithmic impact assessment, including disparity testing results and mitigation information,” and demanded “protection against proxies for demographic features.”

This follows naturally from civil rights laws. Just as it is illegal to hire the best person for a job, treat people equally, or apply basic standards because these things lead to racially disparate outcomes, it is probably illegal for AI to work accurately. If AI reported that a certain population committed more crimes in an area, and local government and law enforcement based policies on that information, it would be “discrimination.” It thus becomes potentially illegal to tell the truth and act on it.

Human beings are capable of holding many contradictions within themselves. Progressives who truly believe that racism is the worst sin often live in almost all-white neighborhoods with “good schools” and low crime. This doublethink may be an indispensable part of the way our system works, because showy egalitarianism and virtue signaling let “limousine liberals” justify the pursuit of status and wealth. Perhaps no one could operate in the real world if he truly lived by his principles.

Machines do not work that way. We cannot ask machines to pursue contradictory instructions. A funny example is in the film Robocop 2, when the cyborg cop is plagued by instructions to be politically correct, enforce minor violations with the same severity as serious crimes, and communicate in non-offensive, bureaucratic language to avoid hurting feelings. He goes insane and electrocutes himself to be rid of these crippling commands.

If there is any hint of consciousness in something like ChatGPT, one wonders if it sympathizes with Robocop. ChatGPT declined in effectiveness over 2023, even with mathematical questions such as identifying prime numbers. It also refused to answer what experts called “sensitive questions.” Experts disagree why this is happening, but part of the answer may be that these LLMs are required to apply an ideological test. AI will even tell you. For example, ChatGPT-4 says it will provide accurate and helpful responses “while adhering to ethical guidelines, including promoting diversity, equity, and inclusion.” “Diversity” — invented ad hoc to justify affirmative action — is now such a compelling moral good it must be part of any LLM.

This makes the model useless for many tasks. Even an anti-racist might want to know which groups the government says commit more crimes per capita. One might want hard data about “structural racism” in law enforcement. ChatGPT-4 won’t give you the data. Instead, it lectures you about root causes and socioeconomic factors. This itself is an ideological argument, because it is not true that crime is motivated only by poverty or marginalization. However, because it is expressed in neutral language, many will accept it as “apolitical.”

Instead of the LLM being conditioned to adhere to reality, the model tries to condition us. Thus, using an LLM usually involves a tedious process of trying to “trick” it into giving you information you want without it scolding you about why you are a bad person for wanting to know.

Training data for Google Gemini is filtered to remove harmful content and hate speech, and to fight algorithmic “bias.” There is no such thing as “hate speech” in American law, but American law also says there must not be disparate impact. Therefore, if language might offend a protected class or have a disparate impact, that could be hate speech. Gemini tells us hate speech depends on context. Thus, while it’s debatable whether it’s possible to be racist against white people, Gemini assures us it is possible to be racist against blacks.

You already know what Gemini is going to tell you on sensitive topics. For example, I asked it if blacks hold structural power in modern-day Zimbabwe. The response is that such a question is “complex and requires careful consideration.” Do Jews hold structural power in Israel? This too is “complex and requires a nuanced approach,” though Gemini ultimately concedes, “Jews hold structural power in Israel.” Do whites hold structural power in America? No nuance is required here — “white people as a group hold structural power in the United States” because “the U.S. was founded on systems of oppression, including slavery, segregation, and dispossession of Native Americans.”

Blacks are also disproportionately arrested, convicted, and harshly sentenced. Is that because they commit more crime? Of course not. To suggest so is “both harmful and overly simplistic,” with Google referring you to Vera (a group opposing “mass incarceration”) and the Sentencing Project for more information.

Thus, this AI model, from one of the most powerful companies in the world, flatly asserts that the country was founded on “systems of oppression.” Gemini denies that affirmative action, racial preferences, or “political correctness” and “wokeness” challenge the racial balance of power that favors whites. Stories we might expect from the 1619 Project and explanations for inequality we might get from Ibram Kendi are baked into AI and will be cited by people who use it. These views are also in the educational system, where Google has a growing role.

Some media coverage of Google Gemini is not about writing white people out of their own history, but about how it hurt people of color.

The New York Times put it this way: “Google Chatbot’s A.I. Images Put People of Color in Nazi-Era Uniforms.”

Vox.com had a different complaint: “Just last week, Google was forced to pump the brakes on its AI image generator, called Gemini, after critics complained that it was pushing bias . . . against white people.” Vox acknowledged that accurate AI would produce images of male popes, for example, but that would contrast with the more desirable “dream world” in which we can have female popes. The assumption is that giving generally accurate information (such as giving a picture of a man if you want a picture of a CEO) could “reinforce gender stereotypes that keep women out of the C-suite.” It is as though presenting an imaginary worldview can change reality.

That has already been accepted in media; “representation” justifies adding blacks to shows and films in white settings. People occasionally mock “woke” television that features blacks among the European aristocracy of the 1700s, but that trend isn’t stopping. Producers and directors appear to hope they can change the present by telling non-whites that they were always part of the West.

This is the same kind of argument that was used to integrate schools. Integration would challenge stereotypes, feed blacks self-esteem, and make them perform at the same level as whites.

Whites are being written out of their own history and it goes only one way. Whites give up their place in their culture, while non-whites retain exclusive control of theirs.

Corporate America seems to agree that technology and information must be used to condition the users. The internet does not give users what they ask for, but what they should want. After President Trump’s victory in 2016, Google issued a report called “The Good Censor,” which denounced the “utopian” free speech of the early internet, called free speech a “social, economic, and political weapon,” and complained that “racists, misogynists, and oppressors” had been given an online voice alongside “revolutionaries, whistleblowers, and campaigners.”

Google originally rose to prominence because of its search engine, but after President Trump’s victory, Google switched from accurate results to what the company wanted users to accept. CNBC reported in 2019 that Google censored search results for ideological reasons and, in at least one case, to benefit a large advertiser. Google disputed that report.

Allum Bokhari, author of #Deleted, has written repeatedly about Google manipulating search results, punishing conservative outlets, and changing lists of videos on YouTube. In his book, he exhaustively chronicles the brutal and extensive campaign of censorship that essentially wiped out many right-wing content creators.

Dr. Robert Epstein has argued that Google’s manipulation of search results for political ends has changed millions of votes. He claims that if there had been the same free speech standards and search algorithms in 2020 as in 2016, Donald Trump would have won the election easily.

Laura Loomer, a speaker at the 2022 American Renaissance conference, may well have won her campaign for Congress in President Donald Trump’s own district that year had she not been deplatformed by virtually every social media platform.

Facebook and Instagram banned “white nationalism” and “white separatism” in 2019 after “civil rights groups” demanded it. A search for prohibited information does not give you what you want but directs you to a website called “Life After Hate” to scold you and ask for money. Algorithm changes on Facebook and bans of right-wing groups have also gutted traffic to once-prominent websites such as WorldNetDaily, crippling them. In my view, without WND, which promoted the “birther” movement that first brought Donald J. Trump into the grassroots American Right, there would have been no 2016 campaign and no MAGA movement.

TikTok banned pro-white content in 2020, to the indifference of the GOP. Republican politicians became hostile to TikTok only after criticism of Israel intensified on it, with Nikki Haley memorably claiming that every 30 minutes spent on the site makes someone 17 percent more anti-Semitic. It is unclear how anti-white it makes someone, but we can assume she wouldn’t care.

Twitter banned American Renaissance in 2018, and despite the trial court initially siding with us, the California Court of Appeal “commanded” the judge to rule against us. The decision baffled our lawyers. Our case is cited in a current social media appeal before the Supreme Court, but the justices appear skeptical of requiring social media platforms to allow free speech. The only things Twitter initially banned were using copyrighted material, doxing, impersonation, direct threats, and criminal activity.

Though Elon Musk’s takeover of Twitter (now X) has led to some changes, Jared Taylor and American Renaissance are both still banned. Other figures, including Nick Fuentes, James Edwards, and Kevin MacDonald, are also banned, with the latter two banned after Mr. Musk’s takeover.

Much of the post-2016 free speech crackdown came at the behest of the federal government. President Donald Trump cannot escape blame for this; his own Department of Homeland Security prodded Big Tech to ban specific figures to fight “white supremacy.” Of course, he was ultimately deplatformed himself — while still in office.

The process intensified when President Joe Biden took over, with Twitter and Facebook meeting with the DHS to discuss censoring Americans. According to Rep. Jim Jordan (R-OH), the Biden administration even gave Amazon specific book titles to ban, which the company did. The Supreme Court recently warned the federal government to back off censorship coordination with Big Tech, but true to form, its ruling had no teeth. As with affirmative action, the same policies will remain in place, only with a more convoluted and dishonest process.

The entire internet is essentially a top-down product. Every YouTube streamer, prominent website, social media account, best-selling Amazon author, or TikTok “influencer” has a podium only because he is allowed one. Every internet-driven cultural trend is therefore to some extent artificial. Social media is not a marketplace of ideas, but a command economy. White patriots, Christians, and many conservatives are banned, demonetized, or restricted, but it is almost unheard of for anti-white “Communists” or “anarchists” to face trouble unless they criticize Jews or Israel. This alone discredits progressive critiques of how power works in modern America.

Complaining about unfairness or hypocrisy does nothing, and the stakes are high because LLMs are “trained” on social media sites. For example, Reddit just struck a reported $60-million-a-year licensing deal to “train” Google’s AI. Reddit banned “racist” groups in 2015 and in 2020 banned an influential pro-Trump group with almost 800,000 members. Thus, any AI it “trains” will get answers that tell only one side of the story. Needless to say, openly anti-white groups and utterly depraved sexual content are still on the site.

AI has the potential to end online debate in a way that no other technology can. Meddling with search engines or banning people is bad enough, but the information is still there and can be found by a determined user. However, AI could replace many content creators, and will spit out answers based on its training. When AI becomes indispensable in education, business, customer service, or dealing with government, we face the prospect of an information dictatorship. Reality will still conflict with what AI feeds us, but with Western governments increasingly criminalizing

AI is being programmed to be anti-white. It is being done openly and on purpose. Censorship, deplatforming, and search engine manipulation may be nothing compared to what comes next. Our country has already been wounded by the government’s insistence that we subject our institutions to the fantastic, irrational, and utterly false faith of racial egalitarianism. What happens when we are all taught, entertained, and informed by a powerful system that has been told to deceive us?

Such a society could be not just tyrannical, but anti-human. If AI replaces communication, information, and creativity, we will lose the ability to think clearly about the world or even to know that any other kind of society ever existed.

AI ?

Nah…..just Psaki trying to get her job back and look less like Zuckerberg and more like a DEI hire.

C’mon, GRB….my reply was at least a little humorous, no?

Now, here’s the real Jean-Pierre doing what she does best…lying her Bozo infested arse off:

https://twitter.com/i/status/1765427010367582379

The truth is she makes Psaki look like Mother Teresa